
In my conversations with founders and other product people about generative AI and tools for imagination, one question comes up again and again: “What have you seen that’s interesting?” In context, what’s often meant is something more like: what are the exciting emergent interaction patterns? Our intuition is that generative AI makes mischief with all the bottlenecks in creativity, which should introduce new points of friction and opportunity. But it takes actually playing with the models and products built on them to glimpse the elegant, why-didn’t-I-think-of-that solutions ahead of us.

From the past few months of immersing myself in the landscape of generative AI products, here are five interaction patterns that have stuck with me.

Summoning spell

Lex is “a word processor with artificial intelligence baked in,” built by the team behind Every. In Nathan Baschez’s recounting of “How Lex Happened,” he shares “What I realized was special about Lex—and this realization only came gradually, over the course of a few weeks—was that it embodied a simple idea that somehow no one has exactly nailed yet: online word processing + gpt-3. That’s it!” Further, “If you want to use GPT-3 there are plenty of places to do it, including on OpenAI’s website directly. But nobody yet has an interface that’s a familiar full-featured word processor. Some people are close, but my intuition is they tend to make it too complicated.” The core AI-powered interaction happens at the text insertion point: “The AI wasn’t quite right at first, but we refined it to the simplest possible experience: just type +++, and GPT-3 will give you a few sentences it thinks might come next.”

Lex’s product insight has the ring of truth based on all that I know about word processors from working on one for five years. When typing is the main user action in an application, there’s power in letting people stay in the flow and do more at the text insertion point. You see this in slash commands in Notion and similar products, and in inline @mention insertion, a now-common pattern we helped introduce through Quip. (Try typing @ in Google Docs and it’ll bring up a menu of people you can mention, docs you can reference, and “building blocks” you can add in.) Typing “+++” in Lex is a sort of “blah blah blah,” “etc. etc. etc.,” “dot dot dot” expression that feels totally natural in context. You’re stuck, you want to get unstuck, but the frustration is just below your consciousness, not something you’re ready to admit. So why not type a few pluses and see what happens? Definitely a why-didn’t-I-think-of-that moment.
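To make the mechanics of a summoning spell concrete, here is a minimal sketch of how an editor might watch for a trigger at the text insertion point. It is not Lex's implementation: the `handle_trigger` and `fake_complete` names are hypothetical, and the stub stands in for a real language model call.

```python
from typing import Callable, Tuple

TRIGGER = "+++"  # the trigger sequence the article describes for Lex


def handle_trigger(text: str, cursor: int,
                   complete: Callable[[str], str]) -> Tuple[str, int]:
    """Replace a trigger typed just before the cursor with a completion.

    Returns the new text and the new cursor position. If the text before
    the cursor does not end with the trigger, nothing changes.
    """
    before, after = text[:cursor], text[cursor:]
    if not before.endswith(TRIGGER):
        return text, cursor
    context = before[: -len(TRIGGER)]   # everything the writer typed so far
    suggestion = complete(context)      # ask the model what might come next
    new_before = context + suggestion
    return new_before + after, len(new_before)


def fake_complete(context: str) -> str:
    # Hypothetical stand-in for a model call; a real editor would send
    # `context` to a text-generation service here.
    return " [a few sentences that might come next]"
```

The design choice worth noticing is that the writer never leaves the insertion point: the trigger is consumed, the suggestion lands where the cursor was, and the cursor ends up right after it.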

(Thank you to Nathan for the Lex invite!)

Highlight as springboard

A summoning spell works great when you have a text insertion point to work with, but what about when you don’t? When reading text on a device, highlighting is a natural springboard to action: it’s how we select words to copy into another context, identify the text span in Google Docs that we’re planning to add a comment to, or simply augment the text with a formatting shift—italics, bold, underline, or a colorful highlight. Explainpaper and Readwise Reader are two products that take highlights to the next level as a springboard for generative action.

Explainpaper is delightful in its straightforward utility. I can select text almost absentmindedly (as with the “+++” command in Lex, this saves me from the pain of “admitting” that I’m stuck), and then a few seconds later the right-hand pane fills up with helpful explanatory text. Having recently attempted the manual version, making myself look up every single unfamiliar-to-me term in a machine learning paper abstract as a way of deepening my own context before diving into the paper itself, I can say this is definitely a better experience. I especially appreciate how highlighting keeps me in context: the technical terms in question are domain-specific, so generally when I would open a new browser tab to look them up, I’d end up on a blog post rather than a dictionary entry, and soon enough my browser window would be full of tiny tabs. With Explainpaper, GPT-3 knows enough about the domain and is good enough at summarization that I can get a solid definition (in this case, of “English constituency parsing”) without breaking focus.

As a Readwise user and fan for the past half-decade, I’ve been watching the development of Readwise Reader with great interest. Their plunge into generative is particularly thrilling for me because this is a pragmatic team, not a hype-driven one—so you know it’s got to be useful (or at least truly fun) to meet their standards. Joined by Simon Eskildsen for the quarter as a sort of reader-and-hacker-in-residence, the Readwise team came up with a command palette of 10 GPT-3-powered actions that use highlighted text as a springboard. This Twitter thread from Readwise cofounder Daniel Doyon offers a thorough overview of each of the commands, the thought process behind it, and what they’ve found it’s particularly good for. Of the Haiku command, shown in the screenshot above, Daniel says “For some odd reason, this prompt often achieves levels of compression exceeding more explicit prompts such as ‘summarize’ or ‘tl;dr’. I suppose it has something to do with the strict constraints learned by GPT-3.” And of the whimsical prompts in general, Daniel observes “We've included a handful of silly prompts with Ghostreader not because they're particularly useful, but to help folks learn how to ride this bicycle for the mind that is generative AI.” Something I find particularly exciting about generative AI in Readwise is that all the interface patterns they’ve built up around making highlights, adding annotations, and navigating and resurfacing past highlights pay off here: GPT-3’s response to each in-line prompt can be ephemeral or saved as a more durable annotation.
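One way to picture highlight-as-springboard is as a small lookup from command names to prompt templates, applied to whatever span is selected. The sketch below is an assumption-laden illustration, not how Explainpaper or Readwise actually build their prompts: the `COMMANDS` table, its template wording, and `prompt_for_highlight` are all hypothetical.

```python
from typing import Dict

# Hypothetical prompt templates; the real products' prompts are not public here.
COMMANDS: Dict[str, str] = {
    "haiku": "Write a haiku that captures this passage:\n\n{selection}",
    "explain": "Explain this passage in plain language:\n\n{selection}",
}


def prompt_for_highlight(document: str, start: int, end: int,
                         command: str) -> str:
    """Turn a highlighted span plus a chosen command into a model prompt."""
    selection = document[start:end].strip()
    if not selection:
        raise ValueError("nothing is highlighted")
    if command not in COMMANDS:
        raise ValueError(f"unknown command: {command}")
    # The highlighted text is the only input the reader has to provide.
    return COMMANDS[command].format(selection=selection)
```

The response could then be shown ephemerally in a side pane or saved as an annotation on the highlight, which is the choice the Readwise example above describes.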

Generative drag-to-fill

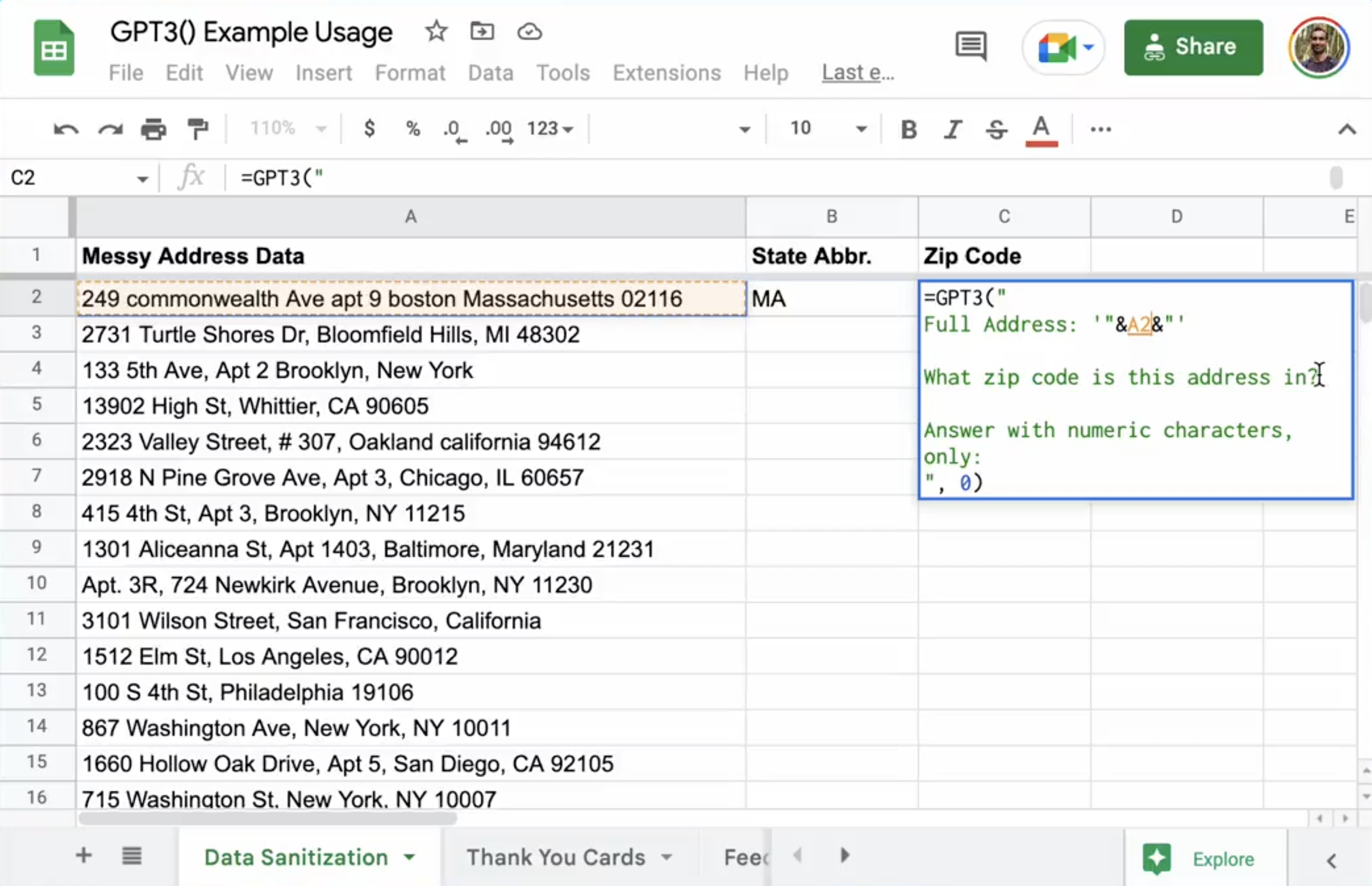

Spreadsheets have been called the original no-code platform for a reason: for millions of people around the world, spreadsheets are their tool of choice when they’re looking to solve a problem. With a “what you see is what you get” interface—drag the column to expand its width! Apply formatting inline!—backed by a deep formula library, spreadsheets remain popular because they balance flexibility and power.

One of my favorite spreadsheet features has long been drag-to-fill—it’s so satisfying to see a span of empty cells fill up with just the right values, with some elements being held constant and others varying in sensible ways. =GPT3(), a project shared by Shubhro Saha, shows the potential of generative models in the context of cleaning data and generating text en masse. While generative models are in general useful for streamlining repetitive tasks, seeing those tasks arrayed in a spreadsheet grid can be an aha moment: looking at Shubhro’s sample sheets calls up for me a visceral memory of all the spreadsheets I’ve filled out and cleaned up the hard way, and brings up the hope of generative prompting being the right balance of flexible enough and structured enough to solve many of those problems forevermore.
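As a rough illustration of generative drag-to-fill, the sketch below treats the cells a person has already filled in as examples and asks a model to complete the empty ones. This is not the =GPT3() project's code: `build_prompt`, `generative_fill`, and the `fake_complete` stub are hypothetical names, and the stub simply tidies capitalization so the example runs without a model.

```python
from typing import Callable, List, Optional, Tuple


def build_prompt(examples: List[Tuple[str, str]], new_input: str) -> str:
    """Few-shot prompt: filled rows become examples, the empty row comes last."""
    parts = [f"Input: {i}\nOutput: {o}" for i, o in examples]
    parts.append(f"Input: {new_input}\nOutput:")
    return "\n\n".join(parts)


def generative_fill(inputs: List[str], outputs: List[Optional[str]],
                    complete: Callable[[str], str]) -> List[str]:
    """Fill every empty output cell, keeping hand-entered cells unchanged."""
    examples = [(i, o) for i, o in zip(inputs, outputs) if o is not None]
    filled = []
    for cell_input, cell_output in zip(inputs, outputs):
        if cell_output is not None:
            filled.append(cell_output)  # held constant, like a normal fill
        else:
            filled.append(complete(build_prompt(examples, cell_input)).strip())
    return filled


def fake_complete(prompt: str) -> str:
    # Hypothetical stand-in for a model call: reads the last input in the
    # prompt and returns a cleaned-up, title-cased version of it.
    last_input = prompt.rsplit("Input: ", 1)[1].split("\nOutput:")[0]
    return " " + last_input.strip().title()
```

For example, with inputs `["new york", " san francisco ", "BOSTON"]` and only the first output filled in as `"New York"`, the stub fills the remaining two cells in the same style.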

See also this 2020 example of GPT-3 operating in spreadsheet cells.

Generative wizards

It’s about time wizards made a comeback. From the Nielsen Norman Group:

A wizard is a step-by-step process that allows users to input information in a prescribed order and in which subsequent steps may depend on information entered in previous ones. Wizards usually involve multiple pages and are often (but not obligatorily) displayed in modal windows. One wizard page typically corresponds to a single step in a multistep process. As the user enters information, the system computes the appropriate path for that user and routes her accordingly. Wizards thus often have some branching logic behind the scenes, but the perceived user experience is that of a linear flow: one screen after another, and all the user has to do is to click “next.” (Or “back,” or “cancel,” but mainly the user keeps moving forward.)

My favorite example of a generative wizard that I’ve seen so far comes from Sudowrite, cofounded by a dear friend of mine (he’s the reason my husband and I met thirteen years ago). Sudowrite was way ahead of the curve on a number of these generative interface explorations—they’ve been experimenting and pushing the envelope for years at this point, so playing around in their app offers a window into a lot of exploration that’s unfolded iteratively over a long period of time. (Two Sudowrite features that deserve more attention: the “Twist” flow that helps users generate ideas for plot twists, and the “Poem” flow that composes a poem based on user inputs, paired with an image.) The screenshot sequence above is from their Brainstorm feature, and shows an enticing first screen asking “What do you want to brainstorm?” with visual tiles featuring options like “world building” and “plot points,” all illustrated in a fun and cohesive way. From there, the next screen asks for prompt inputs in a structured way appropriate for that brainstorming topic, then produces a screen full of ideas that can be voted up and down.

Wizards have particular value in generative AI applications. For one, generative AI can be overwhelming in its abundance: the same tools that could in theory help us storm the fortress of writer’s block sometimes spark writer’s block of their own when we’re faced with a blinking text insertion point in a prompt field. Wizards can help distill the universe of generative possibilities down to a set you’re more likely to want, guiding you along a step-by-step journey to getting results. For another, there’s potential for generative AI to actually generate the options in the wizard. You could imagine a wizard screen of visual, labeled tiles backed by a prompt that asks one model for a set of 5-10 disparate words, then feeds each of those words into an image generation model with the instruction to make the results visually cohesive. Coming up with “the right options” for a wizard can be difficult even for the product managers and designers putting them together (I’ve tried), especially when you go deep into the decision tree and are trying to keep the options there at the same quality level.
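The idea of a wizard whose options are themselves generated can be sketched as a two-stage pipeline: one model proposes labels, and a second renders a tile for each label under a shared style instruction. Everything named here is an assumption for illustration (`build_wizard_tiles`, the `Tile` type, the style string, and both stub models); only “world building” and “plot points” come from the Sudowrite example above.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Tile:
    label: str
    image_prompt: str
    image_ref: str


def build_wizard_tiles(topic: str,
                       suggest_words: Callable[[str, int], List[str]],
                       render_image: Callable[[str], str],
                       style: str = "flat pastel illustration",
                       count: int = 6) -> List[Tile]:
    """Ask a text model for labels, then render each one in a shared style."""
    labels: List[str] = []
    seen = set()
    for word in suggest_words(topic, count):
        key = word.strip().lower()
        if key and key not in seen:      # drop blanks and near-duplicates
            seen.add(key)
            labels.append(word.strip())
    tiles = []
    for label in labels[:count]:
        # The same style suffix on every prompt is what keeps tiles cohesive.
        prompt = f"{label}, {style}"
        tiles.append(Tile(label, prompt, render_image(prompt)))
    return tiles


def fake_suggest_words(topic: str, count: int) -> List[str]:
    # Hypothetical stand-in for a text model.
    return ["world building", "plot points", "Plot Points", "characters", "names"]


def fake_render_image(prompt: str) -> str:
    # Hypothetical stand-in for an image model; returns a placeholder reference.
    return "img:" + prompt.replace(" ", "_")
```

A real version would also need a human check on the generated labels, since the difficulty of choosing “the right options” does not disappear just because a model proposed them.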

(While we’re on the topic, this type of wizard is also due for a comeback.)

Prompt palette

Most applications for producing visual work have converged on the panel as an interface pattern. Across Adobe’s suite, for instance, floating panels organize the many, many controls on offer. Panels are especially good for keeping possibilities visually close at hand without occupying the valuable screen real estate in the center of the canvas.

Prompt.ist is “a magic sketchpad you can use with friends,” made by the team behind Facet, an AI-powered image editor. Where Facet features AI mainly as the engine behind commands, Prompt.ist puts prompt-based image generation front and center. Playing around with Prompt.ist, I was struck by how the familiar interface pattern of a floating panel felt so new when filled with a palette of prompts. I especially liked seeing the imagination applied to the prompts themselves. Maybe AI came up with them, maybe the Facet team brainstormed a set of compelling prompt words in Slack, but in any case, glancing at the Prompt.ist prompt palette gives me the same thrill as seeing a whole fresh set of Copic markers in every color. This pattern creates a feeling of combinatorial abundance: the prompt ingredients themselves are evocative, but then by combining them, you get the feeling of something wholly new.
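The feeling of combinatorial abundance has simple arithmetic behind it: a small palette yields many blends. The sketch below is only an illustration with a made-up palette and hypothetical function names, not a description of how Prompt.ist combines prompts.

```python
from itertools import combinations
from math import comb
from typing import List, Sequence

# Hypothetical palette of prompt ingredients.
PALETTE = ["watercolor", "neon", "isometric", "foggy", "origami"]


def combine(subject: str, ingredients: Sequence[str]) -> str:
    """Join a subject with the chosen palette ingredients into one prompt."""
    return ", ".join([subject, *ingredients])


def all_blends(palette: Sequence[str], subject: str, size: int) -> List[str]:
    """Every prompt that mixes exactly `size` ingredients from the palette."""
    return [combine(subject, chosen) for chosen in combinations(palette, size)]


# Five ingredients taken two at a time already give comb(5, 2) == 10 prompts.
pairs = all_blends(PALETTE, "a lighthouse", 2)
```

Adding a sixth ingredient raises the number of pairs from 10 to 15, which is part of why a modest panel of prompt words can feel inexhaustible.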

And that’s it—for now

I write to learn, so I hope you’ll reach out if you’ve seen any interesting generative interface patterns I haven’t shared here. And if you’re working on one that hasn’t seen the light of day yet, even better! You know where to find me—diana@matrixpartners.com.